Last week’s post, “Why keywords are no longer relevant to SEO”, produced more of a reaction than anticipated. Today I’ll show you how to adapt to the new reality.

In a sense, Google have done the SEO world a favour by forcing us away from a flawed model. Even setting aside the “(not provided)” issue, the fact is that different users not only have different needs but also use different terms to find what they are looking for.

Consider an over-simplified example of a fictional company selling anti-virus software – we’ll call their product KillVir.

I’ve never worked for such a company, but it’s reasonable to assume that it receives a large number of visitors searching for quite distinct terms, despite all of them potentially being interested in the same application.

Some of the searches might include the following terms:

- Is my computer infected with a virus?
- realtime malware protection
- how do I protect my computer?
- low resources virus protection

And many more.

The old SEO model might start by looking in the Analytics data to see (1) how much traffic the website is getting for each term and (2) which pages those visitors are landing on. Neither is possible today.

What we can do, however, is take a step back and look at each phrase separately. The people searching for each phrase are all potentially interested in KillVir, but not only are they searching for different phrases, they also represent very different personas.

Someone searching for *is my computer infected with a virus* is more likely to be non-technical; *realtime malware protection* suggests a greater level of knowledge and understanding; *how do I protect my computer* probably comes from someone non-technical; while *low resources virus protection* points to someone with above-average technical knowledge and very specific needs.

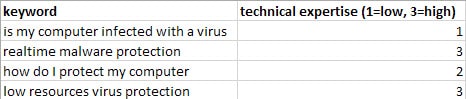

If we were to score the technical expertise for each query, it might look like this:

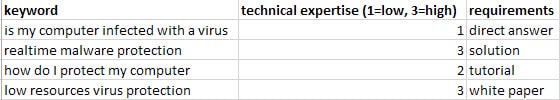

We can also add another layer of analysis, as the language used provides strong signals as to what the person searching is actually looking for or hoping to find:

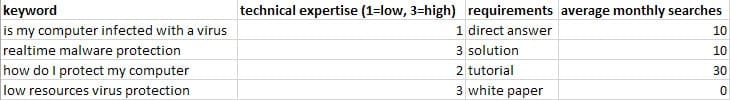

And here’s where the old methodology does still carry some weight: we can look up how many searches there are for each term using Google’s Keyword Planner.

So even with this over-simplified example using only four keywords, we already have some useful information. One obvious opportunity might be to create very focused content for each query.
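The per-query analysis can be kept in a simple structure and turned into a page plan. This is a minimal Python sketch, not a tool used in the original analysis: the 1 to 5 expertise scale, the individual scores, the intent labels and the `tone_for` thresholds are all hypothetical assumptions, and `monthly_searches` is deliberately left empty to be filled in from Keyword Planner.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class QueryProfile:
    """One search query and what it suggests about the searcher (hypothetical values)."""
    query: str
    expertise: int   # assumed scale: 1 = non-technical, 5 = highly technical
    intent: str      # assumed label for what the searcher hopes to find
    monthly_searches: Optional[int] = None  # fill in from Google's Keyword Planner


# Hypothetical scores loosely following the persona descriptions in the text.
PROFILES = [
    QueryProfile("is my computer infected with a virus", 1, "diagnose a possible infection"),
    QueryProfile("how do I protect my computer", 1, "general protection advice"),
    QueryProfile("realtime malware protection", 4, "compare real-time protection features"),
    QueryProfile("low resources virus protection", 5, "lightweight product for specific needs"),
]


def tone_for(expertise: int) -> str:
    """Map an expertise score to the tone a dedicated page should take (assumed thresholds)."""
    if expertise <= 2:
        return "plain-language, step-by-step"
    if expertise <= 3:
        return "explanatory, some detail"
    return "technical, specification-led"


def content_plan(profiles):
    """Return one (query, intent, tone) brief per query, most technical first.

    Python's sort is stable, so queries with equal scores keep their original order.
    """
    ordered = sorted(profiles, key=lambda p: p.expertise, reverse=True)
    return [(p.query, p.intent, tone_for(p.expertise)) for p in ordered]


for query, intent, tone in content_plan(PROFILES):
    print(f"{query!r}: {intent} ({tone})")
```

Sorting by expertise is only one way to prioritise; once real search volumes are filled in, weighting by `monthly_searches` may be more useful.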

For example, the page addressing *is my computer infected with a virus* shouldn’t be a general page about anti-virus software, and certainly shouldn’t be a sales page with one short section of text that barely addresses the question. Instead, it should be a detailed article outlining the precise steps users could take to determine whether their machine is infected. There would be lots of illustrations and lots of information, the overall tone would be very non-technical, and there would be very little in the way of blatantly pushing KillVir.

Once the page is complete, it should be linked to from the rest of the website, added to sitemap.xml and submitted to Google for indexing. At the same time, it should be circulated freely via the company blog, Twitter, LinkedIn, Facebook and other channels as a useful resource. Why? Because it genuinely is a useful resource, and not just another thinly veiled sales page.
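Adding the new page to sitemap.xml is easy to automate. Below is a minimal Python sketch that builds a sitemap using the `urlset`, `url`, `loc` and `lastmod` elements of the sitemaps.org protocol; the page URL and date are hypothetical placeholders, and submitting the finished file to Google is a separate step not shown here.

```python
import xml.etree.ElementTree as ET
from datetime import date

# Namespace required by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return sitemap.xml text for an iterable of (loc, lastmod_date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in urls:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod.isoformat()  # W3C date, e.g. 2014-01-20
    # Prepend the declaration ourselves so the declared encoding is always UTF-8.
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + ET.tostring(urlset, encoding="unicode")


# Hypothetical URL for the "is my computer infected" article.
print(build_sitemap([("https://www.example.com/is-my-computer-infected/", date(2014, 1, 20))]))
```

In practice the list of URLs would come from the site’s CMS rather than being hard-coded.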

With a little time and a little luck, the page should attract a reasonable number of incoming links and should eventually start showing up in Analytics as a landing page for organic traffic.

The website owner would never know which precise keywords were sending traffic from Google, but this no longer matters. If the SEO does their job correctly, we can trust Google to send visitors who are looking for what the page provides. More importantly, these invisible terms would change over time, but the page would be no less relevant, and would therefore keep attracting visitors as the keywords shift.

It’s a basic illustration, but once you get your head around the new “content for intent” model, it can truly work wonders. Welcome to SEO in 2014.
